Despite the success of deep learning, tree-based machine learning is still competitive in many application domains. Recent literature shows that on tabular data, for example, tree-based methods still outperform neural networks while being faster and easier to apply and requiring less tuning.

- Speaker
- Date: Thursday 15 May 2025, 12:00 - 13:00
- Type: Seminar
- Room: 1.16
- Building: Langeveld Building
- Location: Campus Woudestein

However, this research focuses exclusively on discriminative models and their predictive performance. In this talk, I present two recent tree-based methods that go beyond predictive performance. First, I present an explanation method that provides a global representation of a prediction function by decomposing it into a sum of main and interaction components of arbitrary order.
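As a rough illustration of what such a decomposition looks like, a prediction function of d features can be written as a sum over feature subsets. The notation below (the fitted function \hat{m}, components m_S, and feature index set S) is assumed here for illustration and is not taken from the announcement:

```latex
\hat{m}(x)
  = m_0
  + \sum_{j=1}^{d} m_j(x_j)
  + \sum_{j<k} m_{jk}(x_j, x_k)
  + \dots
  + m_{1,\dots,d}(x_1, \dots, x_d)
  = \sum_{S \subseteq \{1,\dots,d\}} m_S(x_S)
```

Here m_0 is a constant, the m_j are main effects, and the higher-order terms are interactions. Such a decomposition is only unique once an identification constraint is fixed; the announcement does not state which constraint is used.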

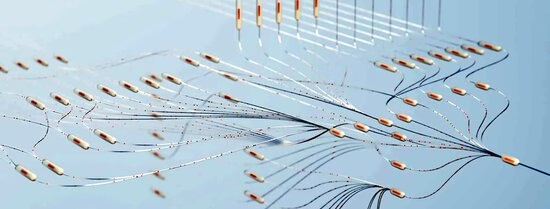

Our method extends Shapley values to higher-order interactions and is applicable to tree-based methods that consist of ensembles of low-dimensional structures, such as gradient-boosted trees. Second, I present adversarial random forests, a provably consistent method for density estimation and generative modeling.

With adversarial random forests, we achieve comparable or superior performance to state-of-the-art deep learning models on various tabular data benchmarks while executing about two orders of magnitude faster.

Do you want to know more about the event? Contact the Econometrics secretariat at eb-secr@ese.eur.nl.